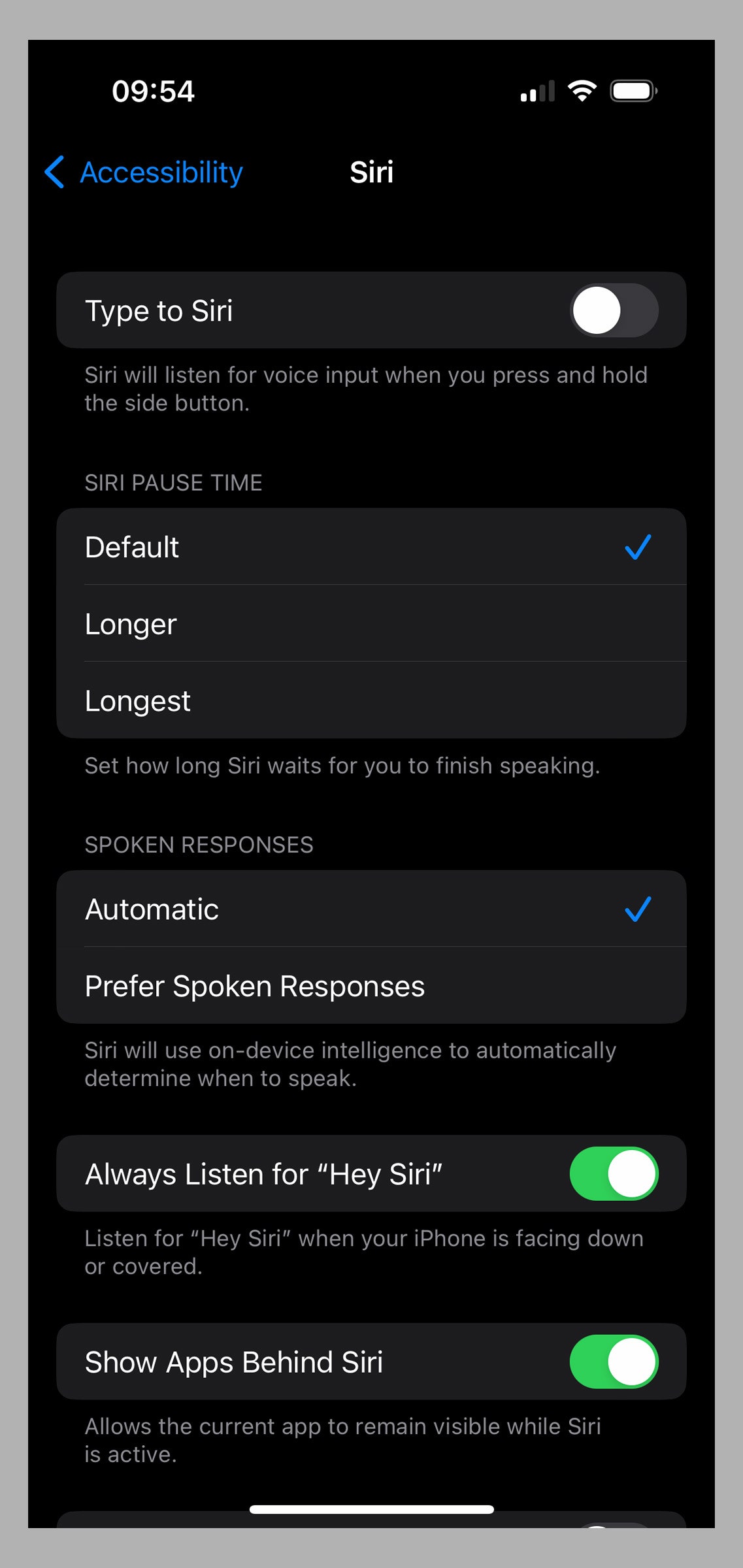

If full voice control is a bit much, you can use Siri on iPhone and Google Assistant on Android to open apps, adjust settings, and more. You may have already set up Siri when you first set up your iPhone, but a couple of settings make Apple's voice assistant even more useful for people with visual impairments. Go to Settings > Accessibility > Siri and consider some changes. If you turn on Always Listen for "Hey Siri," it will listen even when your iPhone is face down or covered. You can also extend how long Siri waits for you to finish speaking with Siri Pause Time, toggle on Prefer Spoken Responses, and toggle on Announce Notifications on Speaker.

Apple via Simon Hill

To make similar changes to Google Assistant, go to Settings > Google > Settings for Google Apps > Search, Assistant & Voice, and select Google Assistant. You may want to tap Lock Screen and turn on Assistant responses on lock screen. If you scroll down, you can adjust the sensitivity, turn on Continued Conversation, and choose which Notifications you want Google Assistant to give you.

How to identify objects, doors and distances

First launched in 2019, the Lookout app for Android lets you point your camera at something to find out what it is. This smart app helps you sort mail, identify groceries, count money, read food labels, and more. The app offers different modes for specific situations:

Text mode is for short text, such as signs or mail.

Documents mode can read an entire handwritten letter or a full page of text.

Images mode uses Google's latest machine-learning model to give you an audio description of an image.

Food Label mode can scan barcodes and identify food items.

Currency mode identifies denominations of different currencies.

Explore mode highlights objects and text around you as you move your camera.

The AI-enabled features work offline, without Wi-Fi or a data connection, and the app supports multiple languages.

Apple has something similar built into its Magnifier app, but it relies on a combination of the camera, on-device machine learning, and lidar. Unfortunately, lidar is only available on Pro model iPhones (12 or later), the iPad Pro 12.9‑inch (4th generation or later), and the iPad Pro 11‑inch (2nd generation or later). If you have one, open the app, tap the gear icon, and select Settings to add Detection Mode to your controls. There are three options:

People Detection notifies you of nearby people and tells you how far away they are.

Door Detection does the same for doors, but it can also outline them in the color of your choice, give you information about a door's color, material, and shape, and describe decorations, signs, or text (such as opening hours). This video demonstrates several of Apple's accessibility features in action, including Door Detection.

Apple via Simon Hill

Image Descriptions can identify many things around you and report them as on-screen text, speech, or both. If you're using VoiceOver, you can also go to Settings > Accessibility > VoiceOver > VoiceOver Recognition > Image Descriptions and turn it on to get descriptions of images, such as pictures.

You don't need a Wi-Fi or data connection to use these features. You can configure things like distances, whether you want sound, haptic, or speech feedback, and more under Detectors at the bottom of Settings in the Magnifier app.

How to take better selfies

Guided Frame is a new feature that works with TalkBack but is currently available only on the Google Pixel 7 and 7 Pro. People who are blind or have low vision can take the perfect selfie with a combination of precise audio guidance (move right, left, up, down, forward, or backward), high-contrast visual animations, and haptic feedback (different combinations of vibrations). The feature tells you how many people are in the frame, and when you hit that "sweet spot" (which the team used machine learning to find), it counts down before taking the photo.

The Buddy Controller feature on iPhone (iOS 16 and later) lets you use two controllers to play a single-player game. You can help visually impaired friends or family when they get stuck in a game (make sure to ask first). To turn on this feature, connect two controllers and go to Settings > General > Game Controller > Buddy Controller.

While this guide can’t cover all features that can help with visual impairments, here are a few final tips that may be helpful.

Whether you're on an Android phone or an iPhone, you can get spoken directions, and they should be on by default. If you're using Google Maps, tap your profile picture in the top right, select Settings > Navigation settings, and choose your preferred Guidance volume.

Both Google Maps and Apple Maps offer a feature where you can raise your phone and scan your surroundings to get a live view of your directions. For Apple Maps, go to Settings > Maps > Walking (under Directions) and check that Raise to View is on. For Google Maps, go to Settings > Navigation settings and scroll down to confirm that Live View under Walking options is on.

If you’re browsing the web on an Android device, you can always ask Google Assistant to read the web page by saying: “Hey Google, read it.”

You can find more useful advice on how technology can support people with visual impairments at the Royal National Institute of Blind People (RNIB). To get video tutorials for some of the features we discussed, we recommend visiting Hadley’s website and trying the workshops (you need to register).
