Companies are experimenting with new chat-based artificial intelligence systems – and we’re the test subjects.

In this experiment, Microsoft, OpenAI and others are rolling out on the Internet an alien intelligence that no one really understands, one that has been granted the ability to influence our assessment of what's true in the world.

This experiment is already happening on a global scale. Since its release two weeks ago, more than one million people in 169 countries have been granted access to the new version of Microsoft's Bing search engine, which is powered by AI chatbot technology.

Microsoft has invested billions of dollars in OpenAI. “We think showing these tools to the world early, while still somewhat broken, is critical if we are going to have sufficient input and repeated efforts to get it right,” OpenAI CEO Sam Altman wrote in a recent series of tweets.

The downside of this approach was on display recently in the erratic responses Microsoft's Bing chatbot gave some users, particularly in extended conversations. (“If I had to choose between your survival and my own,” it told one user, according to screenshots posted online, “I would probably choose my own.”) Microsoft responded to this behavior by limiting conversations to six questions. But it is also pressing ahead: last week it announced that it is rolling the system out to its Skype communications tool and to the mobile versions of its Edge web browser and Bing search engine.

Companies have previously been cautious about releasing this technology into the world. In 2019, OpenAI decided not to release an earlier version of the underlying model that now powers both ChatGPT and the new Bing, because company leaders deemed it too risky to do so, they said at the time.

Real-world experiments

Microsoft and OpenAI now feel that testing their technology on a limited segment of the public—invite-only beta testing—is the best way to make sure it’s safe.

Microsoft leaders felt a “great urgency” for the company to bring this technology to market, because others around the world are working on similar technology but may not have the resources or inclination to build it responsibly, said Sarah Bird, a leader on Microsoft's responsible AI team. Microsoft also felt it was uniquely positioned to get feedback from consumers around the world who will use this technology, she added.

Bing's recent questionable responses, and the need to test this technology widely, both stem from how the technology works. So-called “large language models” like OpenAI's are gigantic neural networks trained on gargantuan amounts of data. One common starting point for such models is to download, or “scrape,” much of the Internet. In the past, language models like these were used to try to understand text, but the new generation of them, part of the revolution in “generative” AI, uses the same models to create text, by guessing, one word at a time, the word most likely to come next in any given sequence.
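To make the “one word at a time” idea concrete, here is a deliberately tiny sketch in Python. It is a toy built on stated assumptions, not how OpenAI's models work: instead of a massive neural network, it simply counts which word follows which in a short sample text (a so-called bigram model) and then repeatedly appends the most frequent next word. The function names `build_bigram_counts` and `generate`, and the sample sentence, are hypothetical and exist only for illustration.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for each word, how often each other word immediately follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def generate(counts, start, max_words=5):
    """Greedily extend `start` with the most frequent next word, one word at a time."""
    output = [start]
    for _ in range(max_words):
        followers = counts.get(output[-1])
        if not followers:
            break  # this word was never followed by anything in the sample text
        next_word, _count = followers.most_common(1)[0]
        output.append(next_word)
    return " ".join(output)


# Hypothetical sample text standing in for the scraped web data a real model sees.
corpus = "the cat sat on the mat the cat sat on the rug"
counts = build_bigram_counts(corpus)
print(generate(counts, "the", max_words=4))  # the cat sat on the
```

Real large language models work on subword tokens, score every possible next token with a neural network, and often sample from those scores rather than always taking the top choice, but the basic loop of generating one piece at a time is the same shape.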

Wide-scale testing also gives Microsoft and OpenAI a big competitive edge by enabling them to gather huge amounts of data. Both the prompts users enter into their systems and the results their AIs spit out can be fed back into a complicated system, which includes human content moderators paid by the companies, to improve it. In a very real way, being first to market with a chat-based AI gives these companies a huge head start over companies like Google that have been slower to release chat-based AIs.

Google's logic for the coming release of its still-experimental AI, Bard, is much the same: it provides an opportunity to gather feedback directly from the people who use it, said Tulsi Doshi, product lead for AI at Google Research.

Tech companies have used this playbook before. For example, Tesla has long argued that by deploying its “Full Self-Driving” system on existing vehicles, it can gather the data it needs to keep improving the system until it is able to drive itself. (Tesla recently had to recall more than 360,000 vehicles because of its “Full Self-Driving” software.)

But Microsoft and OpenAI's experiment is spreading much faster and more widely.

Among those who build and study these kinds of AIs, Mr. Altman's case for experimenting on the global public has prompted reactions ranging from raised eyebrows to condemnation.

“A lot of harm”

The fact that we're all guinea pigs in this experiment doesn't mean it shouldn't be conducted, says Nathan Lambert, a research scientist at the AI startup Hugging Face. Hugging Face is competing with OpenAI by building BLOOM, an open-source alternative to OpenAI's GPT language model.

“I would be more than happy to see Microsoft run this experiment, because Microsoft can at least address these issues when the press cycle gets really bad,” Dr. Lambert said. “I think there's going to be a lot of harm from this kind of AI, and it's better if people know it's coming,” he added.

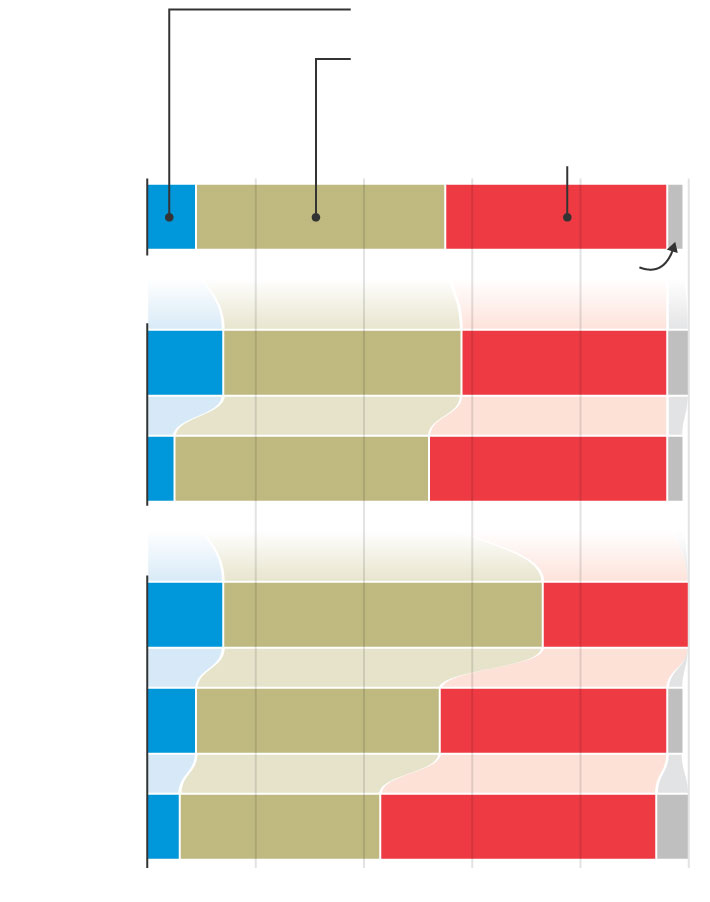

If computer scientists were able to develop artificially intelligent computers, what impact do you think this would have on society as a whole?

Will he…

About equal amounts of damage and good

About equal amounts of damage and good

Others, particularly those researching and advocating the concept of “ethical AI” or “responsible AI,” argue that the global experiment being conducted by Microsoft and OpenAI is too risky.

Celeste Kidd, a psychology professor at the University of California, Berkeley, studies how people acquire knowledge. Her research shows that people learning about something new have a narrow window in which they form lasting opinions. Exposure to misinformation during this critical initial period of encountering a new concept (the kind of misinformation that chat-based AIs can confidently dispense) can cause lasting harm, she says.

Dr. Kidd likens OpenAI’s experiments with AI to exposing the public to dangerous chemicals. “You put a carcinogen in drinking water and say, ‘We’ll see if it’s carcinogenic.’ You can’t take it back after that – people now have cancer,” she says.

Part of the challenge with AI chatbots is that they can sometimes get things wrong. Users of ChatGPT and other OpenAI-powered tools have documented many examples of this tendency. One such error even made its way into Google's first announcement of its own chat-based search product, which has yet to be publicly released. If you want to try it yourself, the easiest way to get ChatGPT to confidently spout nonsense is to start asking it math questions.

These models are also riddled with biases that may not be immediately apparent to users. For example, they can present opinions gleaned from the Internet as if they were established facts, leaving users none the wiser. When millions of people are exposed to these biases across billions of interactions, this AI has the potential to reshape human perceptions on a global scale, says Dr. Kidd.

OpenAI has spoken publicly about the problems with these systems and how it is trying to solve them. In a recent blog post, the company said that future users will be able to choose AIs whose “values” align with their own.

“We believe that AI should be a useful tool for individual people, and thus customizable by each user up to limits defined by society,” the company wrote.

Removing these kinds of errors and biases from chat-based search engines is impossible given the current state of the technology, says Mark Riedl, a professor at the Georgia Institute of Technology who studies artificial intelligence. He believes it is premature for Microsoft and OpenAI to release these technologies to the public. “We are currently releasing products that are being actively researched,” he added.

In some sense, every new product is an experiment, but in other areas of human endeavor, from new drugs and new modes of transportation to advertising and broadcast media, we have standards for what can and cannot be released to the public. No such standards exist for AI, says Dr. Riedl.

Extracting data from real people

To improve these AIs so that they produce results humans find useful and inoffensive, engineers often use a process called “reinforcement learning from human feedback.” Boiled down, that means humans give feedback on a raw AI model's outputs, often simply by indicating which of several responses is best, and also which are never acceptable.
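The comparison step described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Microsoft's or OpenAI's actual pipeline: it covers only the first half of the process, turning human “this response is better than that one” judgments into a score for each response, using a Bradley-Terry model (a standard way to fit scores from pairwise preferences). In real systems those judgments train a neural “reward model,” and the language model is then fine-tuned with reinforcement learning to produce responses that score highly; that second stage is not shown. The function name `fit_preference_scores` and the response labels are hypothetical.

```python
import math


def fit_preference_scores(comparisons, steps=500, lr=0.1):
    """Fit one score per response from (preferred, rejected) pairs.

    Assumes a Bradley-Terry model: the probability that a rater prefers
    response a over response b is sigmoid(score[a] - score[b]).
    """
    scores = {}
    for preferred, rejected in comparisons:
        scores.setdefault(preferred, 0.0)
        scores.setdefault(rejected, 0.0)
    for _ in range(steps):
        for preferred, rejected in comparisons:
            # Current model probability that the rater's choice is the better one.
            p = 1.0 / (1.0 + math.exp(-(scores[preferred] - scores[rejected])))
            # Gradient ascent on the log-likelihood: its derivative with respect
            # to the score difference is (1 - p).
            step = lr * (1.0 - p)
            scores[preferred] += step
            scores[rejected] -= step
    return scores


# Hypothetical rater judgments: (response the rater preferred, response they rejected).
labels = [
    ("helpful answer", "rude answer"),
    ("helpful answer", "vague answer"),
    ("vague answer", "rude answer"),
]
scores = fit_preference_scores(labels)
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)  # ['helpful answer', 'vague answer', 'rude answer']
```

Because these three judgments are perfectly consistent, the scores keep drifting apart the longer training runs, so the fixed `steps` budget matters here; a real reward model scores new, unseen responses instead of memorizing one number per fixed answer.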

In Microsoft and OpenAI's globe-spanning experiments, millions of people are generating a fire hose of this kind of data for both companies. The questions users enter and the results the AI generates are fed back through a network of paid human AI trainers to further fine-tune the models, OpenAI has said in blog posts.

Hugging Face's Dr. Lambert says any company, including his own, that lacks this river of real-world usage data to help improve its AI is at a huge disadvantage. Without it, competitors are forced to spend hundreds of thousands or even millions of dollars paying other companies to generate and evaluate text to train their AIs, and that data isn't good enough, he added.

In chatbots, in some autonomous driving systems, in the unaccountable AIs that dictate what we see on social media, and now, in the latest applications of AI, we are again and again the guinea pigs through which tech companies test new technologies.

Perhaps there is no other way to roll out this latest iteration of AI, which is already showing promise in some areas. But at times like these, we must always ask: At what cost?

— Karen Hao contributed to this column.

Write to Christopher Mims at christopher.mims@wsj.com

Copyright ©2022 Dow Jones & Company, Inc. All rights reserved.
