Artificial intelligence may one day be used to create lifelike cyborgs or other figments of the human imagination. For now, Ingo Stork is using the technology to help restaurant chains waste less food and do more with fewer workers.

Dr. Stork is the co-founder of PreciTaste, which uses AI-based sensors and algorithms to predict how much food people will order at any given time, and to make sure it is prepared on time.

The idea of reducing waste came to Dr. Stork after he visited a quick-service kitchen one afternoon a few years ago and watched a cook grill 30 burger patties, only to throw them all away when no one showed up to eat them. Why was this cook following a schedule written in anticipation of a normal day at the restaurant, rather than one suited to the slow day it actually was?

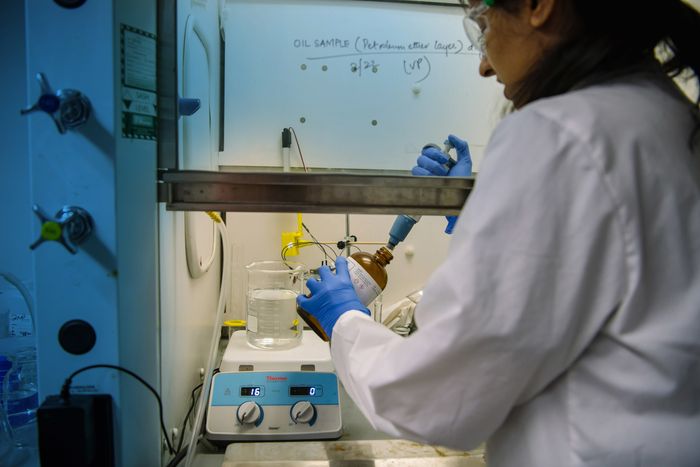

A Phuc Labs engineer prepares a sample to run in the startup’s AI-based screening system, which uses machine-vision algorithms to identify valuable metal particles in e-waste.

Photo: Justin Salem Meyer/Phuc Labs

“Each burger is a 50-mile drive in terms of CO2 emissions,” he says, referring to the energy required to raise cows and eventually turn them into burgers. “Think of all the logistics of getting there, only to have it all go to waste and get rid of it.”

Using AI tools to reduce waste and increase productivity at fast-food joints is hardly the stuff of science fiction. It isn’t as flashy as some of the artificial intelligences that have been getting a lot of attention lately, like DALL-E, which can create clever images based on text prompts, or GPT-3, text-generation software that has been used to write papers about itself. And it isn’t as likely to make headlines as Google’s LaMDA chatbot, which offers such humanlike conversation that one of the company’s engineers described it as sentient, a claim the company flatly denies.

But, with few exceptions, these headline-grabbing systems aren’t making a material impact on anyone’s bottom line yet.

The AI systems that are most important to companies these days are more humble. If they were human, they’d be wearing hard hats and making cameos on the reality show “Dirty Jobs.”

Keep it ruthlessly simple

When entrepreneur Phuc Vinh Truong found himself stuck in his Massachusetts home during the Covid-19 lockdowns, he came up with a simple idea. What if you could see contaminants in a stream of liquid and remove them one by one?

That led to Phuc Labs, a startup working on a new way to use AI to make e-waste recycling more efficient.

The system starts with the shredded material left over after recyclers shred batteries and other old electronic devices. Typically, this waste is processed with a variety of techniques, including chemical separation. Instead, Phuc Labs suspends the particles in water, then circulates the resulting liquid through tiny tubes, where a camera captures it passing at 100 frames per second.

Each frame is analyzed by a computer running machine-vision algorithms, which are trained to distinguish the metal particles that are valuable to recyclers from everything else. As a particle nears the end of the tube, a small, powerful jet of air is fired at the stream, diverting the “slug” of water containing the particle into a reservoir. The water is cycled through the system repeatedly until almost all of the precious metals have been separated.
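
The timing problem in that description can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Phuc Labs’ implementation: only the 100-frames-per-second camera rate comes from the article, while the tube length, flow speed, `Detection` record and `jet_fire_time` function are hypothetical, and the particle is assumed to travel at the constant speed of the water.

```python
from dataclasses import dataclass
from typing import Optional

FRAME_RATE_HZ = 100.0  # camera rate reported in the article


@dataclass
class Detection:
    """One particle spotted in one frame (hypothetical record)."""
    frame_index: int    # frame in which the particle was spotted
    position_mm: float  # distance travelled along the tube (assumed measurable)
    is_metal: bool      # verdict of the vision model, which is not modeled here


def jet_fire_time(det: Detection, tube_length_mm: float,
                  flow_speed_mm_s: float) -> Optional[float]:
    """Seconds after frame 0 at which to fire the jet, or None to let it pass."""
    if not det.is_metal:
        return None
    if flow_speed_mm_s <= 0:
        raise ValueError("flow speed must be positive")
    seen_at_s = det.frame_index / FRAME_RATE_HZ
    remaining_mm = max(tube_length_mm - det.position_mm, 0.0)
    # Assumes the particle moves with the water at constant speed.
    return seen_at_s + remaining_mm / flow_speed_mm_s
```

With these made-up numbers, a metal particle seen in frame 50, 20 mm into a 120 mm tube with water moving at 200 mm per second, would call for the jet 1.0 second after frame 0.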

Phuc Labs’ “vision valve” technology is still in its infancy, but the company is working on a pilot program with IRI, which handles e-waste in the Philippines, said IRI president Lee Echiverri.

This type of filtering may not be possible without AI, but it’s not fancy AI. Machine-vision systems are perhaps the best-studied flavor of AI, and have been around for decades. They are used in everything from the facial recognition camera in your cell phone to autonomous driving systems to the missiles that take out Russian tanks in Ukraine.

Identifying tiny metal particles in such shredded e-waste is like a simple game for an artificial intelligence system. Getting them out is a big challenge.

Photo: Justin Salem Meyer/Phuc Labs

Mr. Truong’s team was able to build one of the first versions of its system using Roboflow, an off-the-shelf computer-vision tool. The team trained it by manually labeling a few hundred images, drawing boxes around particles and labeling them, and the Roboflow software did the rest.
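
Hand-labeling of this kind typically produces a list of labeled boxes per image. The sketch below shows what one such box might look like, along with intersection-over-union (IoU), a standard way to score how closely a model’s predicted box matches a hand-drawn one. The `Box` fields and the `iou` helper are illustrative assumptions, not Roboflow’s data format or API, and the article does not say how the team evaluated its model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """An axis-aligned labeled box in pixel coordinates (hypothetical layout)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str


def area(box: Box) -> float:
    """Area of the box, treating inverted coordinates as empty."""
    return max(box.x_max - box.x_min, 0.0) * max(box.y_max - box.y_min, 0.0)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two boxes: 1.0 is identical, 0.0 is disjoint."""
    overlap_w = max(min(a.x_max, b.x_max) - max(a.x_min, b.x_min), 0.0)
    overlap_h = max(min(a.y_max, b.y_max) - max(a.y_min, b.y_min), 0.0)
    intersection = overlap_w * overlap_h
    union = area(a) + area(b) - intersection
    return intersection / union if union > 0 else 0.0
```

Two 2-by-2 boxes offset by one pixel in each direction overlap in a 1-by-1 square, so their IoU is 1/7, or about 0.14.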

While Phuc Labs’ application of AI is novel, the system works only because it asks so little of the AI at its heart: “Is this metal or not?” Basically, his engineers are creating a simple game for the AI to learn, and games like chess and Go are things AI is already good at, Mr. Truong said.

In many other real-world AI applications, engineers have found that asking AI to do less is what ultimately leads to success. A prime example is autonomous driving systems, which have so far fallen short of their promise of full autonomy but are finding success in more constrained environments, such as trains, ocean-going ships and long-haul trucks.

Specialization fosters flexibility

Each fast-food chain that Dr. Stork’s New York City-based PreciTaste works with presents new challenges for its engineers and its AI-powered restaurant-management systems.

“Each food chain has its own menu, operations, equipment and way of handling things,” he says. Wall-mounted cameras with machine vision, which can track orders from the time the raw ingredients leave the refrigerator until they are ready to serve to the customer, must be positioned differently in each kitchen, for example. And the number of preparation stages can vary greatly by restaurant.

PreciTaste said it could not say which chains are considering the technology. But it is working with commercial-kitchen equipment manufacturer Franke to test the technology in a few local fast-food and fast-casual restaurants, said Greg Richards, the company’s vice president of business development. (Franke has been a McDonald’s supplier since the 1970s.)

For the system to work, depth-sensing cameras must be trained to detect how much of an ingredient, say rice, is in a prep tray. Knowing when to refill it depends on demand, which in turn depends on factors including the weather and local holidays that can determine where people go out to eat and what they order. All of these and more feed into the same kinds of predictive algorithms that help retailers like Amazon manage their logistics networks.
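
The refill decision described here can be reduced to a small sketch: estimate how full the tray is from a depth reading, then compare what is left with what is expected to be ordered before a fresh batch could be ready. Everything in it is an assumption made for illustration, including the function names, the overhead-camera geometry and the numbers; PreciTaste’s actual models are not described in this article, and the demand forecast is simply taken as an input.

```python
def tray_fill_fraction(measured_depth_mm: float, empty_depth_mm: float,
                       full_depth_mm: float) -> float:
    """Estimate how full a tray is (0.0 to 1.0) from an overhead depth reading.

    The camera is assumed to look straight down, so a larger distance to the
    surface means less food in the tray.
    """
    span = empty_depth_mm - full_depth_mm
    if span <= 0:
        raise ValueError("empty depth must exceed full depth")
    fraction = (empty_depth_mm - measured_depth_mm) / span
    return min(max(fraction, 0.0), 1.0)  # clamp noisy readings


def should_refill(fill_fraction: float, tray_capacity_servings: float,
                  forecast_servings_per_min: float,
                  prep_time_min: float) -> bool:
    """Refill when the tray would run out before a new batch could be ready."""
    servings_left = fill_fraction * tray_capacity_servings
    servings_needed = forecast_servings_per_min * prep_time_min
    return servings_left < servings_needed
```

With a reading of 450 mm between an empty depth of 500 mm and a full depth of 300 mm, the tray is a quarter full. For a 40-serving tray that leaves 10 servings, so a forecast of 1.5 servings a minute over an 8-minute prep time (12 servings) triggers a refill, while a slow-day forecast of 0.5 servings a minute (4 servings) does not.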

Today’s AI systems lack intuition, can misbehave when faced with unexpected events, and have little ability to transfer “learned” knowledge from one task to similar situations. In this way, today’s artificial intelligence has almost no intelligence at all. As one AI pioneer put it, it’s just “complex information processing.”

The result is that engineers and data scientists need to do a great deal of hands-on work for their limited AI, including planning, hardware engineering, and writing software. All this is to build a scaffolding on which an AI can be trained to perform tasks that are as narrowly defined as possible.

It may not always be like this

Systems such as DALL-E, GPT-3 and LaMDA are known as “foundation models,” said Oren Etzioni, chief executive of the Allen Institute for AI. Currently, they are mostly research projects. But one day, such systems may be flexible enough to solve problems that today remain the preserve of human intelligence, he added.

Already, these AIs are beginning to branch out and perform a wide range of tasks. One reason is that foundation models trained on more data can be equally capable of writing prose or code. For example, genre fiction writers can use GPT-3-based software to quickly churn out direct-to-Kindle novels. And programmers who use Microsoft’s Copilot are more efficient when the system automatically completes the lines of code they write. Copilot shares a lineage with GPT-3, and like its cousin, which writes marketing copy, fiction and essays, it’s not perfect.

While we wait for these foundation models to find further applications outside of R&D labs, research on related systems is already proving valuable.

Gong, made by a San Francisco-based startup of the same name, is a cloud-based system that records and analyzes the communication channels used by a sales team. This includes phone calls, meetings, emails, transcripts, and more. It then analyzes all of those interactions and makes recommendations, so salespeople can close more deals. These suggestions range from which words and phrases land successful sales to how much to talk during a pitch, which is often less.

Gong works in dozens of languages. For years, this meant that every time the company wanted to update any of its AI models to get better at transcribing or analyzing speech, it had to do so for each language, and sometimes even dialect. According to Amit Bendov, CEO of Gong, this was a huge undertaking.

Then, in 2019, Meta AI, the research arm of Facebook’s parent Meta Platforms, released a system called Wav2vec that uses a new algorithm to quickly learn any language. Using this open-source code allowed Gong engineers to build a single system that parses all the languages and dialects that Gong supports, Mr. Bendov said. Gong now uses a single polyglot AI model, continuously updated, to understand everything the company’s system takes in.

Despite this boost from researchers at Meta, Gong still uses a custom-built speech-recognition system trained on thousands of hours of recorded audio and human-written transcriptions. (This includes recordings of client phone calls, as well as “Seinfeld” episodes and fan-written transcripts of them.)

Gong’s use of AI for relatively narrow tasks, such as speech recognition, and the way its engineers built custom systems to do it, embody the same workaday AI principles as Phuc Labs’ waste-filtering technology and PreciTaste’s restaurant-management systems.

Someday, the big, fancy, attention-grabbing models may be applicable to this company and other businesses, but not yet. Getting there could take a big leap, such as giving AI common sense, including knowledge about the real world, so that it can make sense of all the data it takes in.

“The funny thing is, Gong doesn’t know what an iPad is or what our customer’s business is,” Mr. Bendov said. “When you’re successful, it knows, ‘That’s what it’s all about.’”

Write to Christopher Mims at christopher.mims@wsj.com

Copyright ©2022 Dow Jones & Company, Inc. All rights reserved.