
The most sophisticated AI systems today are capable of impressive feats, from directing cars through city streets to writing human-like prose. But they share a common bottleneck: hardware. Developing systems on the bleeding edge often requires a huge amount of computing power. For example, creating DeepMind’s protein structure-predicting AlphaFold took a cluster of hundreds of GPUs. Further underlining the challenge, one source estimates that developing AI startup OpenAI’s language-generating GPT-3 system using a single GPU would’ve taken 355 years.

New techniques and chips designed to accelerate certain aspects of AI system development promise to (and, indeed, already have) cut hardware requirements. But developing with these techniques calls for expertise that can be tough for smaller companies to come by. At least, that’s the assertion of Varun Mohan and Douglas Chen, the co-founders of infrastructure startup Exafunction. Emerging from stealth today, Exafunction is developing a platform to abstract away the complexity of using hardware to train AI systems.

“Improvements [in AI] are often underpinned by large increases in… computational complexity. As a consequence, companies are forced to make large investments in hardware to realize the benefits of deep learning. This is very difficult because the technology is improving so rapidly, and the workload size quickly increases as deep learning proves value within a company,” Chen told TechCrunch in an email interview. “The specialized accelerator chips necessary to run deep learning computations at scale are scarce. Efficiently using these chips also requires esoteric knowledge uncommon among deep learning practitioners.”

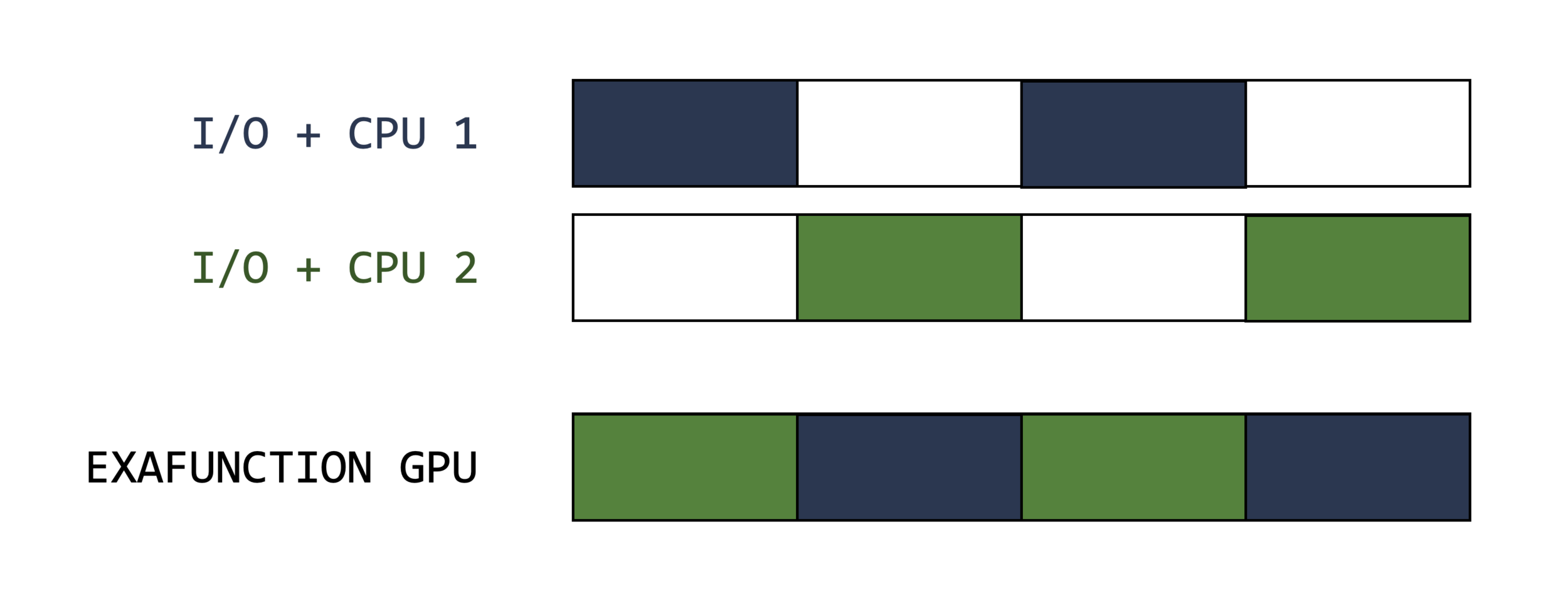

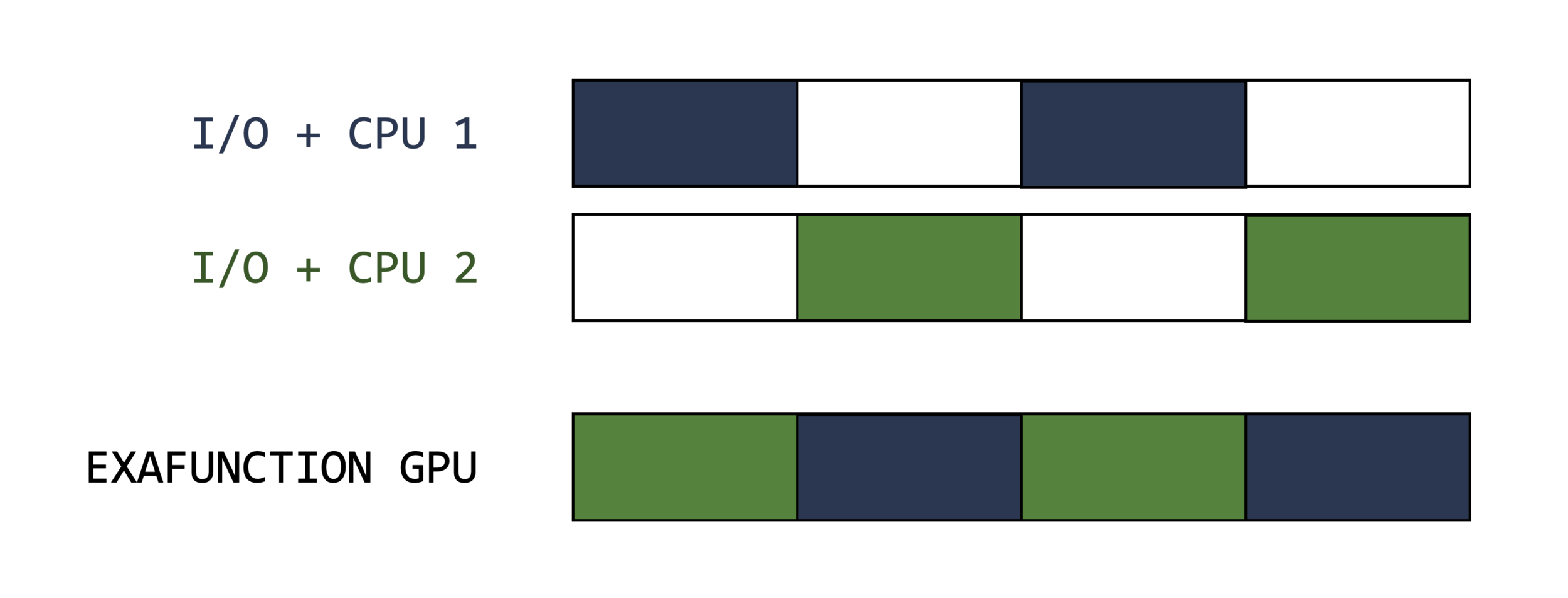

With $28 million in venture capital, $25 million of which came from a Series A round led by Greenoaks with participation from Founders Fund, Exafunction aims to address what it sees as the symptom of the expertise shortage in AI: idle hardware. GPUs and the aforementioned specialized chips used to “train” AI systems – i.e., feed the data that the systems can use to make predictions – are frequently underutilized. Because they complete some AI workloads so quickly, they sit idle while they wait for other components of the hardware stack, like processors and memory, to catch up.

Lukas Biewald, the founder of AI development platform Weights & Biases, reports that nearly a third of his company’s customers average less than 15% GPU utilization. Meanwhile, in a 2021 survey commissioned by Run:AI, which competes with Exafunction, just 17% of companies said that they were able to achieve “high utilization” of their AI resources while 22% said that their infrastructure mostly sits idle.
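To put the utilization figures in concrete terms, here is a rough back-of-the-envelope sketch of what low utilization does to the effective price of GPU time. The hourly price and the function name are assumptions made up for illustration; they do not come from Exafunction, Weights & Biases, or the survey.

```python
def effective_cost_per_useful_hour(hourly_price: float, utilization: float) -> float:
    """Price paid per hour of actual GPU work when the GPU is busy
    only `utilization` (a fraction between 0 and 1) of the time."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    return hourly_price / utilization


# Hypothetical price per GPU-hour, chosen only for illustration.
HOURLY_PRICE = 3.00

for utilization in (0.15, 0.50, 0.90):
    cost = effective_cost_per_useful_hour(HOURLY_PRICE, utilization)
    print(f"{utilization:.0%} utilization: ${cost:.2f} per useful GPU-hour")
```

Under these assumed numbers, a GPU that is busy 15% of the time costs several times more per hour of real work than one that is busy most of the time, which is the gap that utilization-focused tools are trying to close.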

The costs add up. According to Run:AI, 38% of companies had an annual budget for AI infrastructure – including hardware, software, and cloud fees – exceeding $1 million as of October 2021. OpenAI is estimated to have spent $4.6 million training GPT-3.

“Most companies operating in deep learning go into business so they can focus on their core technology, not to spend their time and bandwidth worrying about optimizing resources,” Mohan said via email. “We believe there is no meaningful competitor that addresses the problem that we’re focused on, namely, abstracting away the challenges of managing accelerated hardware like GPUs while delivering superior performance to customers.”

Seed of an idea

Prior to co-founding Exafunction, Chen was a software engineer at Facebook, where he helped to build the tooling for devices like the Oculus Quest. Mohan was a tech lead at autonomous delivery startup Nuro responsible for managing the company’s autonomy infrastructure teams.

“As our deep learning workloads [at Nuro] grew in complexity and demandingness, it became apparent that there was no clear solution to scale our hardware accordingly,” Mohan said. “Simulation is a weird problem. Perhaps paradoxically, as your software improves, you need to simulate even more iterations in order to find corner cases. The better your product, the harder you have to search to find fallibilities. We learned how difficult this was the hard way and spent thousands of engineering hours trying to squeeze more performance out of the resources we had.”

Image Credits: Exafunction

Exafunction customers connect to the company’s managed service or deploy Exafunction’s software in a Kubernetes cluster. The technology (https://www.exafunction.com/) dynamically allocates resources, moving computation onto “cost-effective hardware” such as spot instances when available.

Mohan and Chen demurred when asked about the Exafunction platform’s inner workings, preferring to keep those details under wraps for now. But they explained that, at a high level, Exafunction leverages virtualization to run AI workloads even with limited hardware availability, ostensibly leading to better utilization rates while lowering costs.

Exafunction’s reticence to reveal information about its technology – including whether it supports cloud-hosted accelerator chips like Google’s tensor processing units (TPUs) – is cause for some concern. But to allay doubts, Mohan, without naming names, said that Exafunction is already managing GPUs for “some of the most sophisticated autonomous vehicle companies and organizations at the cutting edge of computer vision.”

“Exafunction provides a platform that decouples workloads from acceleration hardware like GPUs, ensuring maximally efficient utilization – lowering costs, accelerating performance, and allowing companies to fully benefit from hardware… [The] platform lets teams consolidate their work on a single platform, without the challenges of stitching together a disparate set of software libraries,” he added. “We expect that [Exafunction’s product] will be profoundly market-enabling, doing for deep learning what AWS did for cloud computing.”

Growing market

Mohan might have grandiose plans for Exafunction, but the startup isn’t the only one applying the concept of “intelligent” infrastructure allocation to AI workloads. Beyond Run:AI – whose product also creates an abstraction layer to optimize AI workloads – Grid.ai offers software that allows data scientists to train AI models across hardware in parallel. For its part, Nvidia sells AI Enterprise, a suite of tools and frameworks that lets companies virtualize AI workloads on Nvidia-certified servers.

But Mohan and Chen see a massive addressable market despite the crowdedness. In conversation, they positioned Exafunction’s subscription-based platform not only as a way to bring down barriers to AI development but to enable companies facing supply chain constraints to “unlock more value” from hardware on hand. (In recent years, for a range of different reasons, GPUs have become hot commodities.) There’s always the cloud, but, to Mohan’s and Chen’s point, it can drive up costs. One estimate found that training an AI model using on-premises hardware is up to 6.5x cheaper than the least costly cloud-based alternative.

“While deep learning has virtually endless applications, two of the ones we’re most excited about are autonomous vehicle simulation and video inference at scale,” Mohan said. “Simulation lies at the heart of all software development and validation in the autonomous vehicle industry… Deep learning has also led to exceptional progress in automated video processing, with applications across a diverse range of industries. [But] although GPUs are essential to autonomous vehicle companies, their hardware is frequently underutilized, despite their price and scarcity. [Computer vision applications are] also computationally demanding, [because] each new video stream effectively represents a firehose of data – with each camera outputting millions of frames per day.”

Mohan and Chen say that the capital from the Series A will be put toward expanding Exafunction’s team and “deepening” the product. The company will also invest in optimizing AI system runtimes “for the most latency-sensitive applications” (e.g., autonomous driving and computer vision).

“While currently we are a strong and nimble team focused primarily on engineering, we expect to rapidly build the size and capabilities of our org in 2022,” Mohan said. “Across virtually every industry, it is clear that as workloads grow more complex (and a growing number of companies wish to leverage deep-learning insights), demand for compute is vastly exceeding [supply]. While the pandemic has highlighted these concerns, this phenomenon, and its related bottlenecks, is poised to grow more acute in the years to come, especially as cutting-edge models become exponentially more demanding.”
