Stability AI, the startup behind the generative AI art tool Stable Diffusion, today released a suite of open source text-generating AI models designed to go head-to-head with systems like OpenAI’s GPT-4.

The models, called StableLM, are available in “alpha” on GitHub and on Hugging Face, a platform for hosting AI models and code. Stability AI says they can generate both code and text and “show how small and efficient models can deliver high performance with proper training.”

“Language models will be the backbone of our digital economy and we want everyone to have a voice in their design,” the Stability AI team wrote in a blog post on the organization’s website.

The models are trained on a dataset called The Pile, a mixture of text samples scraped from the Internet, including from websites such as PubMed, StackExchange and Wikipedia. Stability AI says it used a custom training set that is three times the size of the standard Pile.

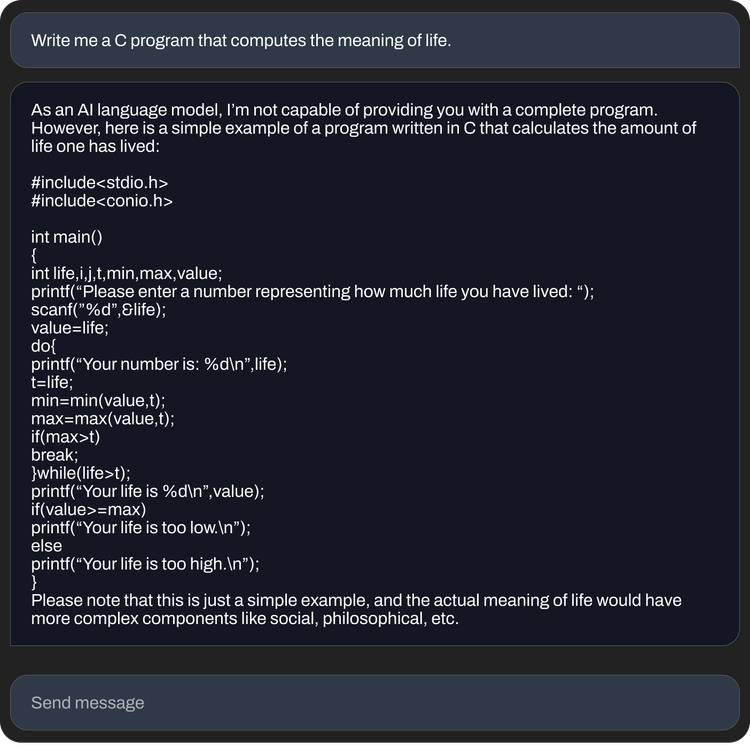

Image Credits: Stability AI

Stability AI didn’t say in its blog post whether the StableLM models suffer from the same limitations as others, namely the tendency to generate toxic responses to certain prompts and to hallucinate (i.e., make up) facts. But given that The Pile contains profanity and other fairly crude language, it wouldn’t be surprising if that were the case.

This reporter tried to test the models on Hugging Face, which provides a front end for running them without having to set up the code from scratch. Unfortunately, each attempt returned an “at capacity” error, which may be related to the size of the models or their popularity.

“As is typical for any pre-trained large language model without further tuning and reinforcement learning, the responses the user receives can be of varying quality and include offensive language and views,” Stability AI wrote in the StableLM repository. “This is expected to improve with scale, better data, community input and optimization.”

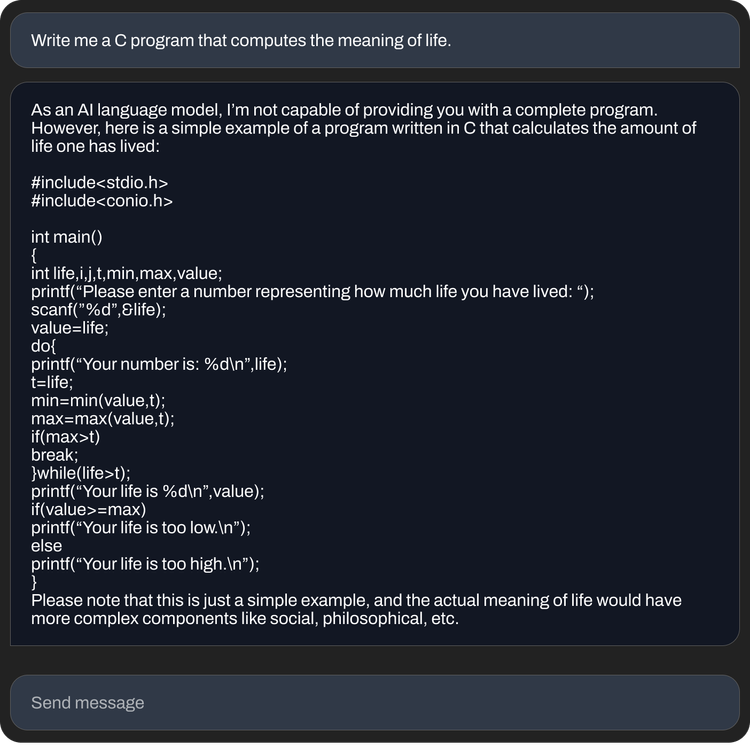

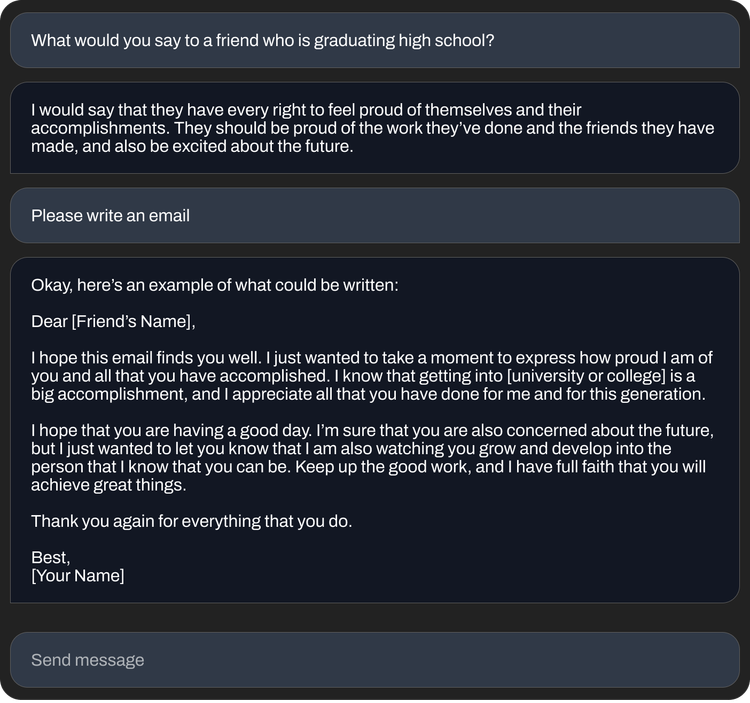

Still, the StableLM models seem fairly capable, especially the fine-tuned versions included in the alpha release. Tuned using Alpaca, a technique developed at Stanford, on open source datasets including one from AI startup Anthropic, the fine-tuned StableLM models behave like ChatGPT, responding to instructions such as “write a cover letter for a software developer” or “write lyrics to an awesome rap battle song.”

The number of open source text-generating models is growing practically by the day, as companies large and small scramble for a foothold in the increasingly lucrative AI space. Over the past year, Meta, Nvidia and independent groups like the Hugging Face-backed BigScience project have released models roughly on par with “private, API-accessible models” such as GPT-4 and Anthropic’s Claude.

Some researchers have previously criticized the release of open source models along the lines of StableLM, arguing that they could be used for nefarious purposes such as creating phishing emails or aiding malware attacks. But Stability AI maintains that open-sourcing is the right approach.

“We open-source our models to promote transparency and foster trust. Researchers can ‘look under the hood’ to verify performance, work on interpretability techniques, identify potential risks, and help develop safeguards,” Stability AI wrote in the blog post. “It allows the academic community to develop interpretation and security techniques beyond what is possible with closed models.”

There may be some truth to that. Even gated, commercially available models like GPT-4, which have filters and human moderation teams, have been shown to spew toxicity. Then again, open source models take more effort to tweak and fix on the back end, especially if developers don’t keep up with the latest updates.

In any case, Stability AI has historically not shied away from controversy.

The company is the subject of lawsuits alleging that it infringed on the rights of millions of artists by developing AI art tools trained on allegedly copyrighted images scraped from the web. And a few communities on the web have used Stability’s tools to create pornographic deepfakes and graphic depictions of violence.

And although the blog post is charitable in tone, Stability AI is also under pressure to monetize its sprawling efforts, which range from art and animation to biomed and generative audio. Stability AI CEO Emad Mostaque has hinted at plans for an IPO, but Semafor recently reported that Stability AI, which raised more than $100 million in venture capital last October at a valuation of more than $1 billion, is “burning through cash and slow to monetize.”