OpenAI is quietly launching a new developer platform that will allow customers to run the company’s newer machine learning models, such as GPT-3.5, on dedicated capacity. In screenshots of documentation published on Twitter by users with early access, OpenAI describes its upcoming offering, called Foundry, as “designed for customers with large workloads.”

“[Foundry allows] inference at scale with full control over the model’s configuration and performance profile,” the documentation says.

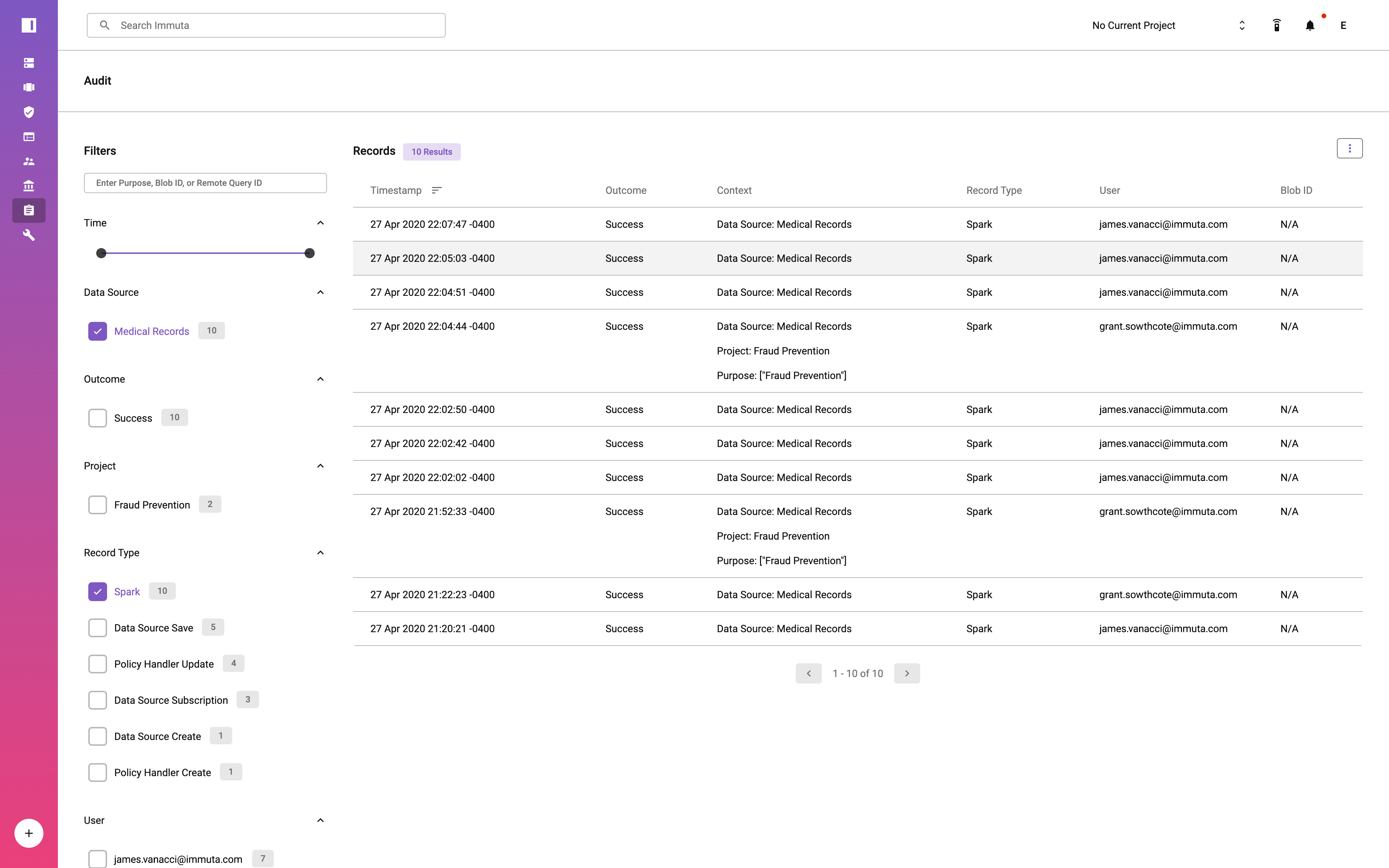

If the screenshots are to be believed, Foundry, whenever it launches, will provide a “fixed allocation” of compute capacity (presumably on Azure, OpenAI’s preferred public cloud platform) dedicated to a single customer. Users will be able to monitor specific instances with the same tools and dashboards that OpenAI uses to build and optimize models. Foundry will also offer some degree of version control, allowing customers to decide whether or not to upgrade to new model releases, as well as “more robust” fine-tuning for OpenAI’s latest models.

Foundry will also offer service-level commitments, such as instance uptime and on-calendar engineering support. Rentals will be based on dedicated compute units with a three-month or one-year commitment. Running an individual model instance requires a specific number of compute units (see table below).

Instances don’t come cheap. Running the lightweight version of GPT-3.5 costs $78,000 for a three-month commitment or $264,000 for a one-year commitment. To put that in perspective, one of Nvidia’s recent-generation supercomputers, the DGX Station, runs $149,000 per unit.
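To make the two commitment prices easier to compare, the arithmetic can be sketched as below. The dollar figures come from the reported pricing; the function and variable names are hypothetical, and the comparison assumes a customer would otherwise renew the three-month commitment four times at the same price.

```python
# Reported prices for the lightweight GPT-3.5 instance, in US dollars.
THREE_MONTH_COMMITMENT = 78_000
ONE_YEAR_COMMITMENT = 264_000


def monthly_rate(total_cost: int, months: int) -> float:
    """Effective cost per month for a commitment of the given length."""
    return total_cost / months


quarterly_per_month = monthly_rate(THREE_MONTH_COMMITMENT, 3)
yearly_per_month = monthly_rate(ONE_YEAR_COMMITMENT, 12)

# Assumption: four back-to-back three-month commitments at an unchanged price.
four_quarters = 4 * THREE_MONTH_COMMITMENT
savings = four_quarters - ONE_YEAR_COMMITMENT
discount = savings / four_quarters

print(f"Three-month plan: ${quarterly_per_month:,.0f} per month")
print(f"One-year plan:    ${yearly_per_month:,.0f} per month")
print(f"Yearly savings:   ${savings:,} ({discount:.1%})")
```

Under those assumptions, the one-year commitment works out to $22,000 per month against $26,000 per month on the three-month plan, a saving of $48,000 over a year.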

Eagle-eyed Twitter and Reddit users noticed that one of the text-generating models listed on the instance pricing chart has a maximum context window of 32k. (The context window refers to the text the model considers before generating more text; longer context windows allow the model to essentially “remember” more.) GPT-3.5, OpenAI’s latest text generation model, has a 4k maximum context window, suggesting that this mysterious new model could be the long-awaited GPT-4, or a stepping stone toward it.
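A rough sketch of what a context window limit means in practice: only the most recent tokens that fit inside the window are visible to the model. This is an illustration, not OpenAI’s implementation. The function name is hypothetical, the placeholder strings stand in for real subword tokens, and the sketch assumes “4k” and “32k” mean 4,096 and 32,768 tokens.

```python
def fit_to_context(tokens: list[str], max_context: int) -> list[str]:
    """Keep only the most recent tokens that fit in the context window."""
    if max_context <= 0:
        return []
    return tokens[-max_context:]


# Assumption: "4k" and "32k" are taken to mean 4,096 and 32,768 tokens.
CONTEXT_4K = 4_096
CONTEXT_32K = 32_768

# A stand-in conversation history of 10,000 placeholder tokens.
history = [f"tok{i}" for i in range(10_000)]

seen_by_4k = fit_to_context(history, CONTEXT_4K)
seen_by_32k = fit_to_context(history, CONTEXT_32K)

print(len(seen_by_4k), seen_by_4k[0])    # the oldest 5,904 tokens are dropped
print(len(seen_by_32k), seen_by_32k[0])  # the whole history still fits
```

With the smaller window, everything before the 5,904th token is cut off, while the larger window holds the entire 10,000-token history.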

OpenAI is under pressure to turn a profit after a multibillion-dollar investment from Microsoft. The company reportedly expects to earn $200 million in 2023.

Compute costs are largely responsible. Training modern AI models can cost millions of dollars, and running them is generally not much cheaper. According to Sam Altman, co-founder and CEO of OpenAI, it costs a few cents per chat to run ChatGPT, OpenAI’s viral chatbot, which is no small sum considering that ChatGPT had more than a million users as of last December.

In moves toward monetization, OpenAI recently launched a “Pro” version of ChatGPT, ChatGPT Plus, starting at $20 a month, and partnered with Microsoft to create a controversial chatbot (to put it mildly) that has gained mainstream attention. According to Semafor and The Information, OpenAI plans to introduce a mobile ChatGPT app in the future and bring its AI language technology to Microsoft apps such as Word, PowerPoint and Outlook.

Separately, OpenAI continues to offer its technology through Microsoft’s Azure OpenAI Service, a business-oriented model-serving platform, and to maintain Copilot, a premium code generation service developed in partnership with GitHub.
