Generative AI is disrupting industries, with understandable controversy.

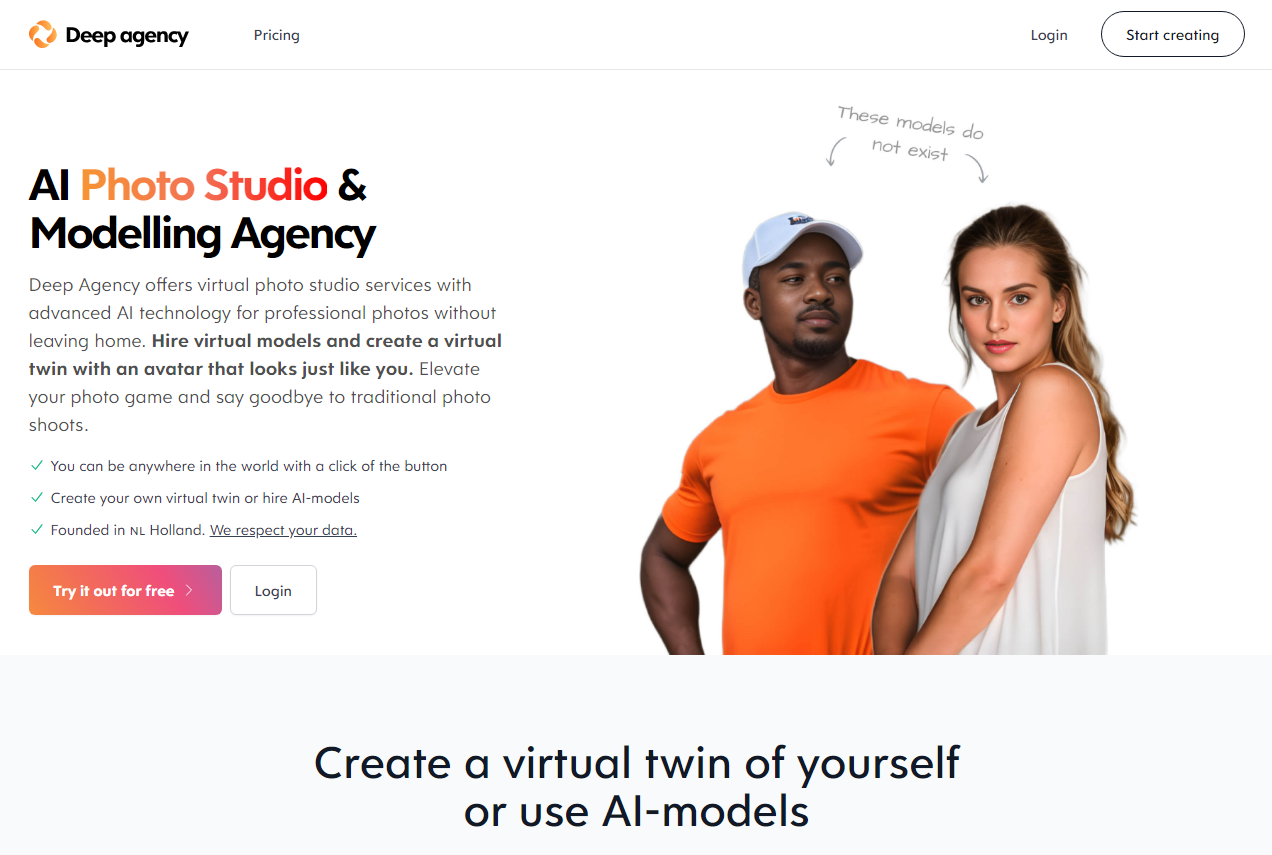

Earlier this month, Danny Postma, founder of Headlime, an AI-powered marketing copy startup recently acquired by Jasper, announced Deep Agency, a platform he described as an “AI photo studio and modeling agency.” Using art-generating AI, Deep Agency creates and offers “virtual models” for hire starting at $29 a month (for a limited time), letting clients place the models against digital backdrops to bring their photo shoots to life.

“What is Deep Agency? It’s a photo studio, with a few big differences,” Postma explained in a series of tweets. “No cameras. No real people. No physical location… Why is this useful? Lots of things, like automating content for social media influencers, models for marketers’ ads and ecommerce product photography.”

Deep Agency is very much in the proof-of-concept phase, which is to say it’s… rough around the edges. There are plenty of artifacts in the models’ faces, and the platform puts guardrails in place, whether intentionally or not, around which physiques can be generated. At the same time, Deep Agency’s model creation is surprisingly difficult to control; try to generate a female model dressed as a police officer, and Deep Agency simply can’t do it.

Still, reaction to the launch was swift — and mixed.

Some Twitter users applauded the launch, expressing interest in using the technology to model clothing and apparel brands. Others accused Postma of pursuing a “highly unethical” business model, scraping other people’s photos and likenesses and selling them for profit.

The split reflects the broader debate over generative AI, which continues to attract staggering levels of funding while raising numerous moral, ethical and legal questions. According to PitchBook, investment in generative AI is projected to reach $42.6 billion in 2023 and grow to $98.1 billion by 2026. Meanwhile, companies including OpenAI, Midjourney and Stability AI are facing lawsuits over their generative AI technologies, which critics accuse of drawing on artists’ work without properly compensating them.

Image Credits: Deep Agency

Deep Agency appears to have struck a particular nerve because of the product’s application, and its implications.

Postma, who did not respond to a request for comment, isn’t shy about the fact that the platform could compete with, and potentially hurt the livelihoods of, real-world models and photographers. While some platforms like Shutterstock have created funds to share revenue from AI-generated art with artists, Deep Agency hasn’t taken any such step, and hasn’t signaled that it intends to.

Coincidentally, just weeks after Deep Agency’s debut, Levi’s announced that it would be teaming up with design studio LaLaLand.ai to create custom AI-generated models to “increase the variety of models consumers see wearing the products.” Levi’s said it plans to use the synthetic models alongside human models and stressed that the move won’t affect its hiring plans. But models with diverse characteristics have historically struggled to find opportunities in the fashion industry, prompting questions as to why the brand didn’t simply hire more of the models with the features it says it’s looking for. (According to one study, in 2016, 78% of models in fashion advertising were white.)

Os Keyes, a doctoral candidate at the University of Washington, noted in an email interview with TechCrunch that modeling and photography, and the arts more broadly, are particularly vulnerable to generative AI because photographers and artists lack structural power. They’re largely low-paid independent contractors working for large companies looking to cut costs, Keyes notes. Models, for example, are often on the hook for high agency commission fees (~20%), as well as business expenses that can include plane tickets, group housing and the promotional materials needed to land jobs with clients.

“The Postma app, if it works, is really designed to pull the chair out from under an already precarious creative workforce and send the money to Postma instead,” Keyes said. “That’s not really something to applaud, but it’s also not that surprising… The fact of the matter is that, socioeconomically, tools like this are designed to further concentrate profit.”

Other critics take issue with the underlying technology. State-of-the-art image-generating systems, like the kind Deep Agency uses, are what’s known as “diffusion models,” which learn to create images from text prompts (e.g., “a bird sitting on a windowsill”) as they work their way through web-scraped training data. An issue on artists’ minds is the tendency of diffusion models to regurgitate images, including copyrighted content, from the data used to train them.

Image Credits: Deep Agency

Companies commercializing diffusion models have long claimed that “fair use” protects them in the event their systems were trained on copyrighted content. (Under U.S. law, the fair use doctrine permits limited use of copyrighted material without first obtaining permission from the rights holder.) But artists have filed lawsuits alleging that the models infringe on their rights, in part because the training data was obtained without their permission or consent.

“The legality of a startup like this isn’t entirely clear, but what is clear is that it aims to put a lot of people out of work,” Mike Cook, an AI ethicist and member of the Knives and Paintbrushes open research group, told TechCrunch in an email interview. “It’s hard to talk about the ethics of tools like this without engaging with deeper issues related to economics, capitalism and business.”

For artists who suspect their art was used to train Deep Agency’s model, there’s no way to remove that art from the training data. That’s worse than platforms like DeviantArt and Stability AI, which offer ways for artists to opt out of contributing their work to train art-generating AI.

Deep Agency hasn’t said whether it plans to set up a revenue share for artists and others whose work helped create the platform’s model. Other providers, such as Shutterstock, are experimenting with a pooled fund to compensate creators whose work is used to train AI art models.

Cook points to another issue: data privacy.

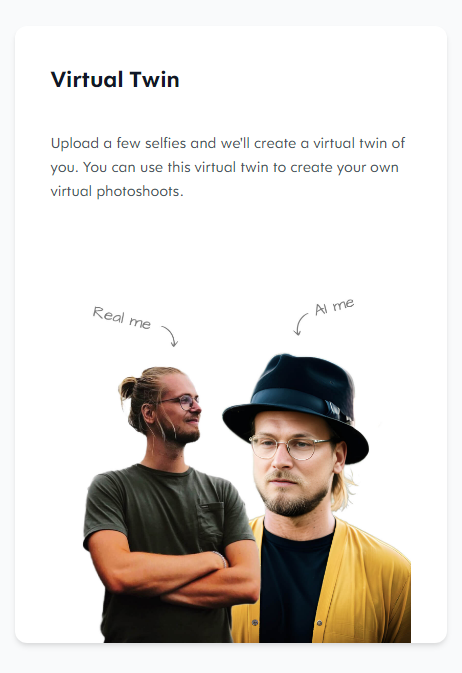

Deep Agency offers a way for clients to create a “digital twin” model by uploading around 20 images in different poses. However, photos uploaded to Deep Agency are added to the training data for the platform’s top-level models unless users explicitly delete them afterward, as outlined in the terms of service.

Deep Agency’s privacy policy doesn’t say exactly how, if at all, the platform processes user-uploaded photos, or where it stores them. And there doesn’t appear to be any way to prevent bad actors from creating someone’s virtual twin without their consent, a legitimate fear given that models like Stable Diffusion have been used to create nonconsensual nude images.

Image Credits: Deep Agency

“Their terms of use actually say, ‘You understand and acknowledge that similar or identical generations may be created by other people using their own prompts.’ In other words, you accept that you might get the same image as someone else, and that your photos may be used by others. I can’t imagine many big companies like the prospect of these things.”

Another problem with Deep Agency’s training data is a lack of transparency around the underlying dataset, Keyes says. In other words, it’s not clear which images the model powering Deep Agency was trained on (although the distorted watermarks that show up in its images offer a hint), which opens the door to algorithmic bias.

A growing body of research has found racial, ethnic, gender and other forms of stereotyping in image-generating AI, including the popular Stable Diffusion model developed with support from Stability AI. Just this month, AI startup Hugging Face and researchers at Leipzig University published a tool showing that models including Stable Diffusion and OpenAI’s DALL-E 2 tend to produce images of people who look white and male, especially when asked to depict people in positions of power.

According to Vice’s Chloe Xiang, Deep Agency only generates images of women unless users buy a paid subscription, which is problematic right off the bat. Moreover, as Xiang wrote, the platform tends to create blonde white female models even if you select an image of a woman of a different race or likeness from the premade catalog. Changing the model’s appearance requires making additional, less obvious adjustments.

“Image-generating AI is fundamentally flawed, because it depends on the representativeness of the data it’s trained on,” Keyes said. “If that data primarily includes white, Asian and light-skinned Black people, then all the synthesis in the world isn’t going to provide representation for dark-skinned people.”

Despite the obvious issues with Deep Agency, Cook doesn’t see it or similar tools disappearing anytime soon. There’s simply too much money in the space, he says, and he’s not wrong. Beyond Deep Agency and LaLaLand.ai, startups like ZMO.ai and Surreal are also attracting big VC investments for technology that generates virtual fashion models.

“As anyone using the Deep Agency beta can see, the tools aren’t great yet. But it’s only a matter of time,” Cook said. “Entrepreneurs and investors will keep banging their heads against opportunities like this until they find a way to make one of them work.”
