It’s often said that large language models (LLMs) along the lines of OpenAI’s ChatGPT are a black box, and there is certainly some truth to that. Even for data scientists, it’s difficult to know why a model responds the way it does, such as when it invents facts out of whole cloth.

In an effort to peel back the layers of LLMs, OpenAI is developing a tool that automatically identifies which parts of an LLM are responsible for which of its behaviors. The engineers behind it stress that it’s in the early stages, but the code to run it has been available as open source on GitHub since this morning.

“We’re trying to [develop ways to] anticipate what the problems with an AI system could be,” William Saunders, the interpretability team manager at OpenAI, told TechCrunch in a phone interview. “We want to really be able to know that we can trust what the model is doing and the answer that it produces.”

To this end, OpenAI’s tool uses a language model (ironically) to figure out the functions of the components of other, architecturally simpler LLMs, specifically OpenAI’s own GPT-2.

OpenAI’s tool attempts to simulate the behaviors of neurons in an LLM.

How does it work? First, some quick background on LLMs. Like the brain, they’re made up of “neurons,” which observe specific patterns in text to influence what the overall model “says” next. For example, given a prompt about superheroes (e.g., “Which superheroes have the most useful superpowers?”), a “Marvel superhero neuron” might boost the probability that the model names specific superheroes from Marvel movies.
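A toy illustration of that boosting effect, with made-up numbers: a hypothetical “Marvel superhero neuron” adds its activation, scaled by an output weight, to the scores (logits) of Marvel characters only, and a softmax turns the scores into probabilities. This is a deliberate simplification; in a real transformer a neuron writes into the model’s hidden state rather than directly into the output scores.

```python
import math


def softmax(logits):
    # Subtract the max score before exponentiating, for numerical stability.
    m = max(logits.values())
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical next-token scores for a superhero prompt (made-up numbers).
base_logits = {"Iron Man": 1.0, "Superman": 1.2, "Batman": 1.1}

# Hypothetical neuron: it fires with this activation and, through its output
# weights, pushes up only the Marvel character's score.
activation = 2.0
output_weights = {"Iron Man": 0.8, "Superman": 0.0, "Batman": 0.0}

boosted_logits = {
    tok: score + activation * output_weights[tok]
    for tok, score in base_logits.items()
}

before = softmax(base_logits)
after = softmax(boosted_logits)
for tok in base_logits:
    print(f"{tok}: {before[tok]:.2f} -> {after[tok]:.2f}")
```

Even though the other characters’ scores are untouched, their probabilities fall, because the softmax redistributes probability mass toward the boosted name.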

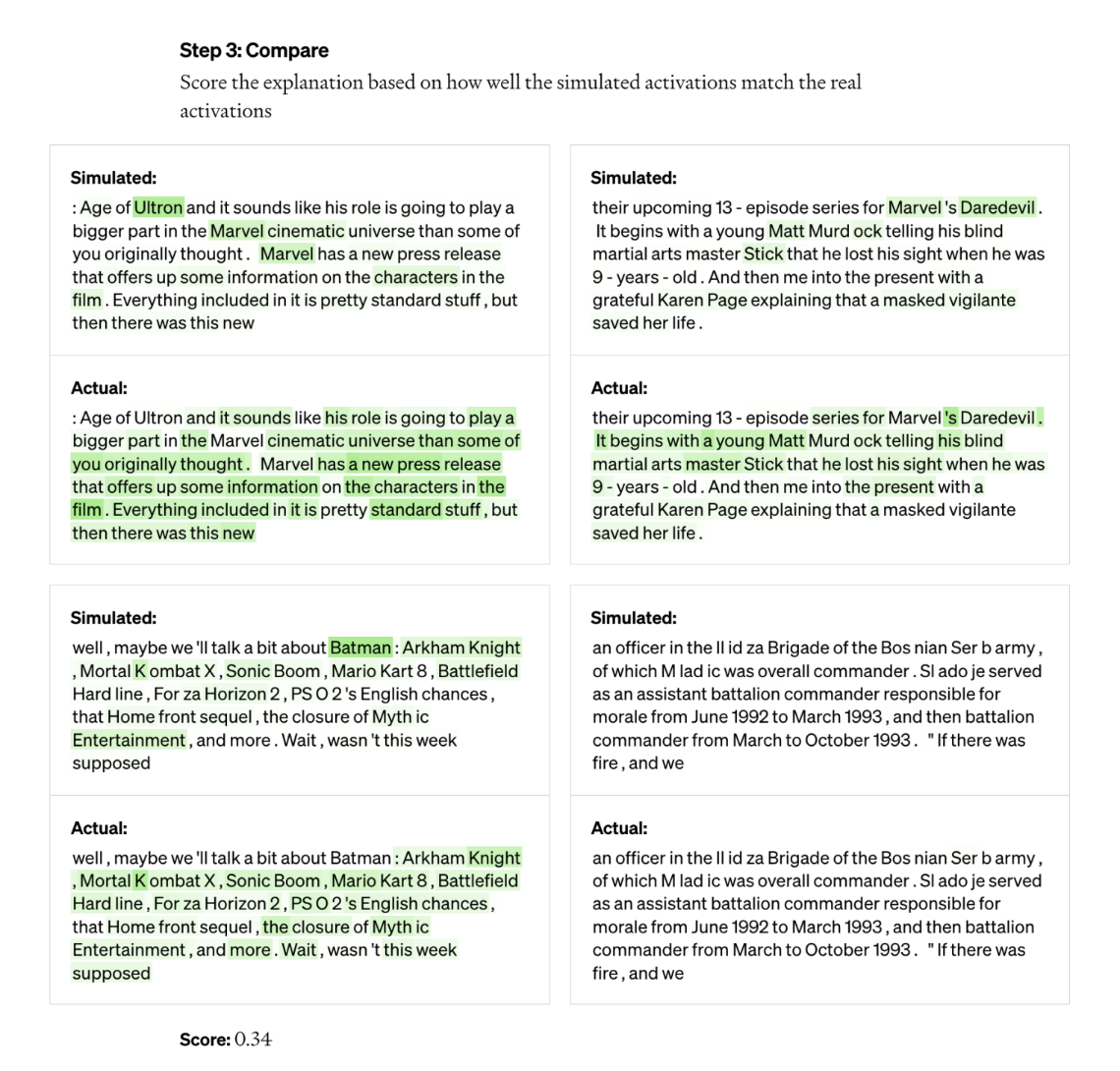

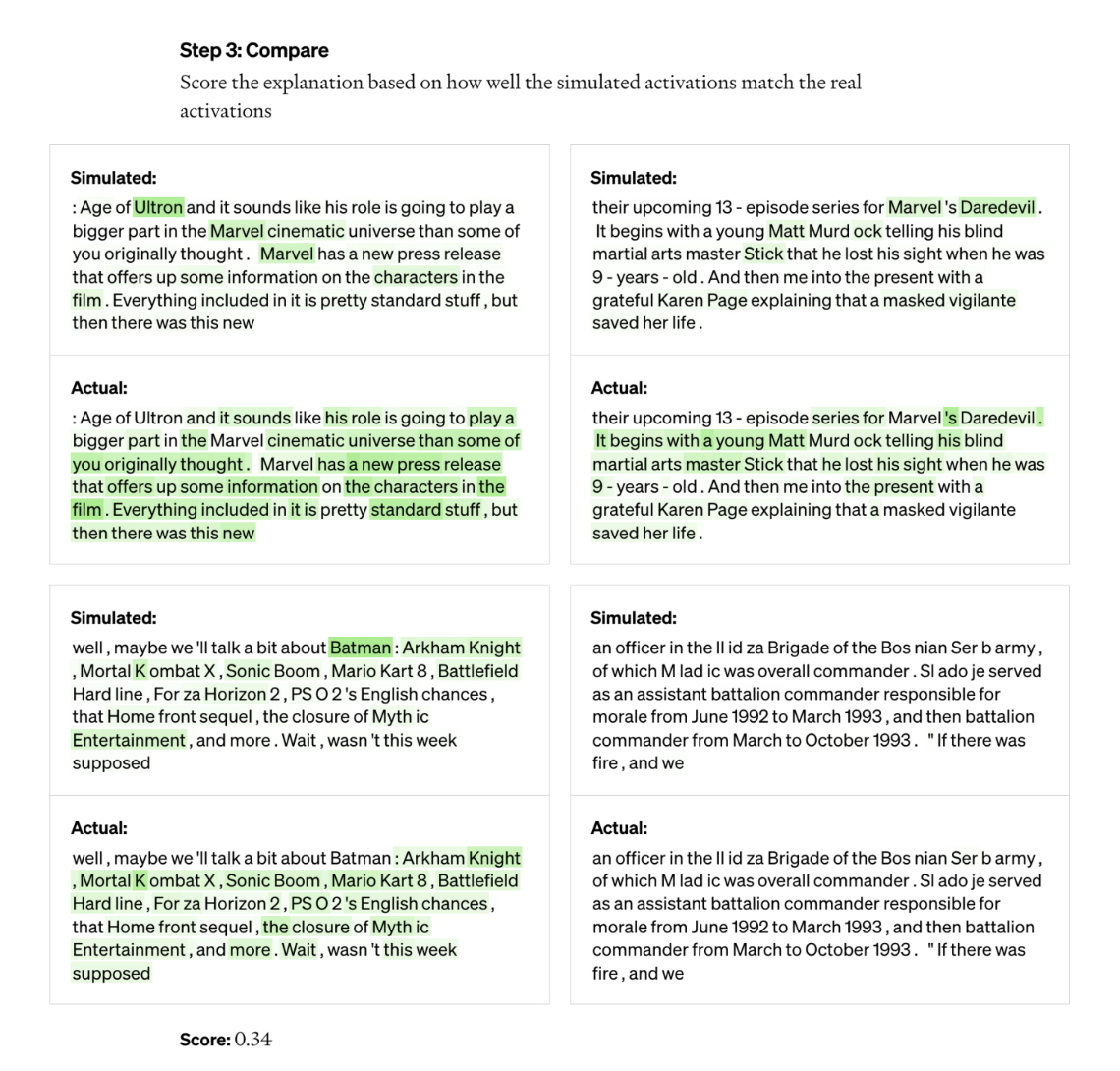

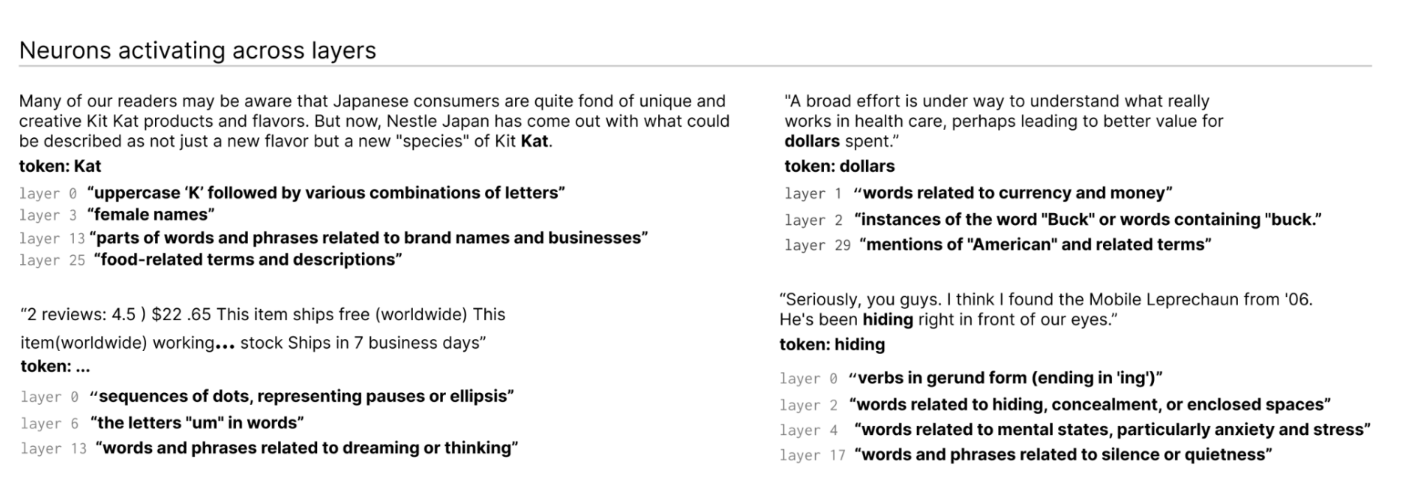

OpenAI’s tool exploits this setup to break models down into their individual pieces. First, the tool runs text sequences through the model being evaluated and waits for cases where a particular neuron “activates” frequently. Next, it “shows” these highly active neurons to GPT-4, OpenAI’s latest text-generating AI model, and has GPT-4 generate an explanation. To determine how accurate the explanation is, the tool gives GPT-4 text sequences and has it predict, or simulate, how the neuron would behave. It then compares the behavior of the simulated neuron with the behavior of the actual neuron.
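The first step, finding text on which a neuron fires strongly, can be sketched as ranking excerpts by the neuron’s peak activation and formatting the winners for the explainer model. The names `top_activating_excerpts` and `format_for_explainer`, the tab-separated prompt format and all activation values are hypothetical, not taken from OpenAI’s released code; the GPT-4 calls for the explanation and simulation steps are left out.

```python
from typing import List, Tuple


def top_activating_excerpts(
    activations: List[List[float]], k: int = 2
) -> List[Tuple[float, int]]:
    """Return (peak activation, excerpt index) for the k excerpts where the neuron fires hardest."""
    # Summarize each excerpt by the neuron's strongest activation on any token.
    peaks = [(max(acts), i) for i, acts in enumerate(activations)]
    peaks.sort(key=lambda pair: pair[0], reverse=True)
    return peaks[:k]


def format_for_explainer(tokens: List[str], acts: List[float]) -> str:
    # Hypothetical prompt format: one "token<TAB>activation" pair per line.
    return "\n".join(f"{tok}\t{act:.1f}" for tok, act in zip(tokens, acts))


# Made-up token sequences and per-token activations for a single neuron.
excerpts = [
    ["Iron", " Man", " and", " Thor"],
    ["The", " weather", " is", " nice"],
    ["Captain", " America", " fights"],
]
activations = [
    [0.1, 3.2, 0.0, 2.9],
    [0.0, 0.1, 0.0, 0.2],
    [2.5, 2.7, 0.3],
]

top = top_activating_excerpts(activations, k=2)
# These formatted excerpts are what would be shown to the explainer model.
prompt_snippets = [format_for_explainer(excerpts[i], activations[i]) for _, i in top]
print("\n\n".join(prompt_snippets))
```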

“Using this method, for essentially every neuron, we can come up with some kind of preliminary natural language explanation of what it’s doing, and we also have a score for how well that explanation matches the actual behavior,” said Jeff Wu, who leads the scalable alignment team at OpenAI. “We’re using GPT-4 as part of the process to produce explanations of what a neuron is looking for, and then we score how well those explanations match the reality of what it’s doing.”
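The scoring step can be sketched as a comparison between the neuron’s real per-token activations and the ones GPT-4 simulated from the explanation. This sketch assumes a Pearson correlation score; the function name `explanation_score` and the example numbers are hypothetical.

```python
import math
from typing import Sequence


def explanation_score(actual: Sequence[float], simulated: Sequence[float]) -> float:
    """Pearson correlation between real and simulated per-token activations."""
    if len(actual) != len(simulated) or len(actual) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_a = sum(actual) / len(actual)
    mean_s = sum(simulated) / len(simulated)
    cov = sum((a - mean_a) * (s - mean_s) for a, s in zip(actual, simulated))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_s = sum((s - mean_s) ** 2 for s in simulated)
    if var_a == 0 or var_s == 0:
        # A constant sequence carries no signal to correlate against.
        return 0.0
    return cov / math.sqrt(var_a * var_s)


# Made-up example: real activations on "Iron Man and Thor".
actual = [0.1, 3.2, 0.0, 2.9]
# A simulation from a good explanation predicts high values on the hero tokens.
good = explanation_score(actual, [0, 9, 0, 8])
# A simulation from a poor explanation predicts the opposite pattern.
bad = explanation_score(actual, [5, 0, 5, 0])
print(f"good explanation: {good:.3f}, poor explanation: {bad:.3f}")
```

Correlation ignores the overall scale of the simulated values, so a simulator only has to get the pattern of when the neuron fires right, not its exact magnitudes.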

The researchers were able to generate explanations for all 307,200 neurons in GPT-2, which they compiled into a dataset released alongside the tool’s code.
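The 307,200 figure is consistent with counting the MLP neurons of the largest GPT-2 variant, GPT-2 XL, which has 48 layers and an MLP width of 6,400 (four times its 1,600-dimensional hidden size). Assuming that is what was counted:

```latex
48 \text{ layers} \times (4 \times 1600) \text{ neurons per layer} = 48 \times 6400 = 307{,}200
```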

Tools like this could one day be used to improve an LLM’s performance, the researchers say, for example by cutting down on bias or toxicity. But they acknowledge that the tool has a long way to go before it’s genuinely useful. It was confident in its explanations for only about 1,000 of those neurons, a small fraction of the total.
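To put “a small fraction” in numbers, taking both figures at face value:

```latex
\frac{1{,}000}{307{,}200} \approx 0.0033 \approx 0.3\%
```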

A cynical person might also argue that the tool is essentially an advertisement for GPT-4, given that it requires GPT-4 to work. Other LLM interpretability tools, such as DeepMind’s Tracr, a compiler that translates programs into neural network models, are less dependent on commercial APIs.

Wu says that isn’t the case: the fact that the tool uses GPT-4 is merely “incidental,” and, if anything, the tool exposes GPT-4’s weaknesses in this area. He also said it wasn’t created with commercial applications in mind and could, in theory, be adapted to use LLMs other than GPT-4.

The tool identifies neurons activating across layers in the LLM.

“Most of the explanations score quite poorly or don’t explain much of the behavior of the actual neuron,” Wu said. “A lot of the neurons, for example, are active in a way where it’s very hard to tell what’s going on, like they activate on five or six different things, but there’s no discernible pattern. Sometimes there is a discernible pattern, but GPT-4 is unable to find it.”

That’s to say nothing of more complex, newer and larger models, or models that can browse the web for information. On that second point, though, Wu believes web browsing wouldn’t change the tool’s underlying mechanisms much. The tool could simply be tweaked, he said, to figure out why neurons decide to make certain search engine queries or access particular websites.

“We hope this will open up a promising avenue to address interpretability in an automated way that others can build on and contribute to,” Wu said. “The hope is that we really actually have good explanations of not just what neurons are responding to but, overall, the behavior of these models: what kinds of circuits they’re computing and how certain neurons affect other neurons.”
